Complete Exclusive n8n Workflow Guide: Real-World Use Case, Setup, and Beginner Learning Path – Ahsan Taz

Exploring n8n Workflow AI Automation in Finance: From News Aggregation to Agentic Workflows

It’s been a while since my last post—things have been busy, especially with the rapid advancements in AI technologies like large language models (LLMs), multi-modal AI, and more.

But here’s the thing: AI is not my core job. My work revolves around corporate finance, not investment banking, portfolio optimization, or advanced quantitative modeling. It’s the essential, hands-on work of ensuring accurate financial data and delivering reports for both internal decision-making and external compliance.

In this field, creativity is often a liability rather than an asset (with a few exceptions, of course). What truly matters is:

- Traceability

- Reliability

- Efficiency

- System reconciliation (because tool fragmentation is inevitable over time)

Unfortunately, these are not the strong points of today’s LLMs or text-to-image models, which excel at pattern recognition and creative output rather than strict accuracy and compliance.

Why Agentic AI Matters for Finance

The agentic AI approach—where intelligent agents solve tasks under defined workflows—has always intrigued me. And as of now, it seems clear that agentic systems will dominate the AI conversation in 2025.

Why? Because they strike the right balance:

- Leveraging LLM creativity for problem-solving

- Maintaining control through structured workflows and compliance rules

Of course, there’s an ongoing debate: “Are workflows truly agents?” My take: in finance, full agent autonomy is unlikely anytime soon. Regulatory frameworks, accountability standards, and compliance requirements will always demand human oversight—especially in sensitive areas like financial reporting and healthcare systems.

My Experiment: Building a Real AI-Powered Use Case

This led me to a question:

Can I design a real-world use case that combines AI capabilities with automation to deliver measurable results—across multiple IT systems—without requiring full agent autonomy?

Here’s what I came up with:

The Use Case: Automating Knowledge Acquisition and Content Creation

- Aggregate Newsletter Content into a Knowledge Base

  - Goal: Automate the process of collecting insights from AI-related newsletters into a Notion database for easy reference.

- Turn Aggregated Knowledge into Actionable Output

  - Next, use an agentic automation workflow to process the data and create an automated LinkedIn post summarizing the latest AI trends.

  - Add complexity: Translate the post into German before publishing, a task that would have been almost impossible to automate a few years ago.

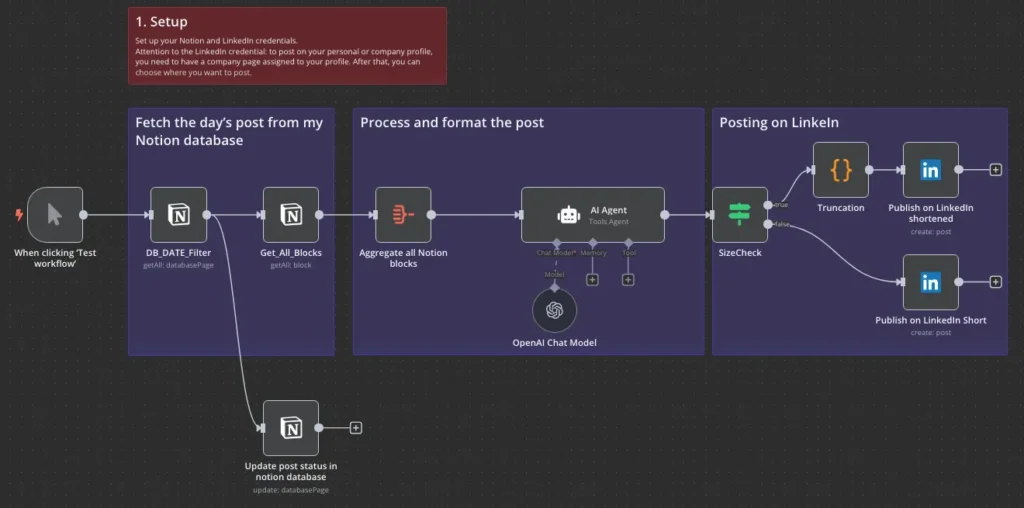

Below is the sequence diagram illustrating this end-to-end workflow. Key components include:

- IMAP for retrieving newsletters

- n8n as the automation engine

- Notion as the data repository

(More details on the setup later.)

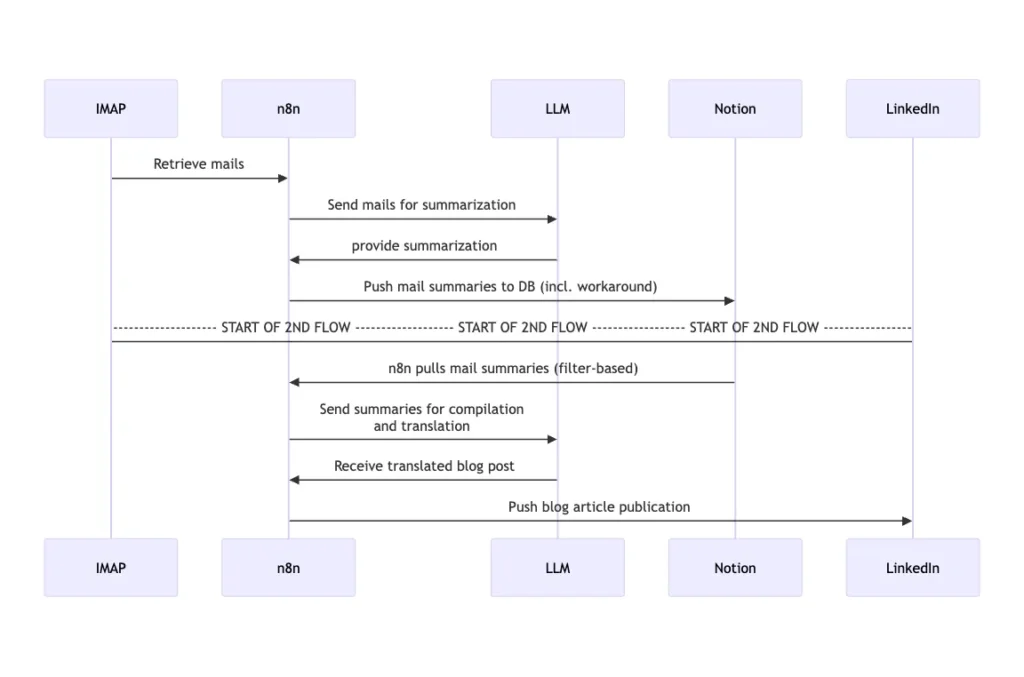

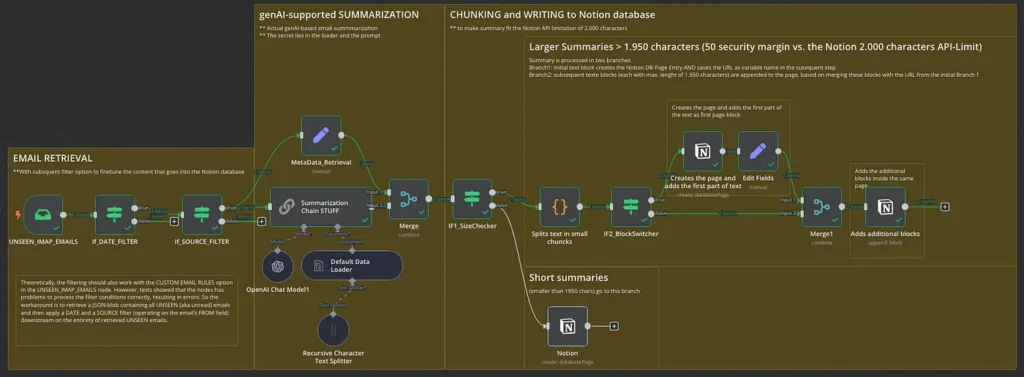

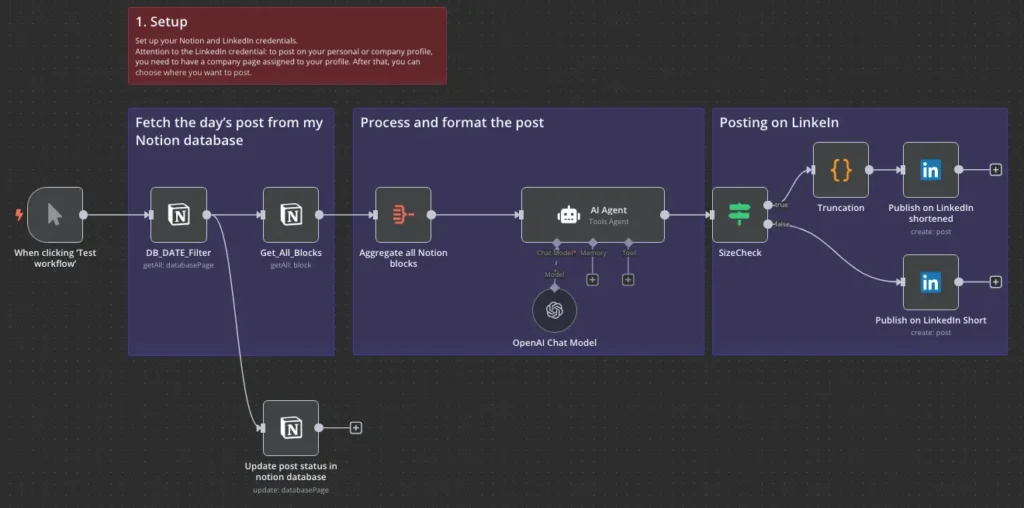

As mentioned earlier, this first part won’t dive into the technical details of the two workflows just yet. However, as a preview, here are the screenshots showcasing both workflows that bring the above sequence diagram to life. A detailed explanation of these workflows will follow in Parts 2 and 3 of this series.

And the other one is:

The Use Case: Why Does It Matter?

When addressing the “Why?” question, it breaks down into two core perspectives:

Why? The Learning Intent

The purpose of this Proof of Concept (PoC) was driven by multiple objectives:

- Learn by doing: Gain hands-on experience with an automation tool.

- Enable agentic workflows: Ensure the chosen tool can flexibly leverage cutting-edge AI capabilities.

- Tackle modern challenges: Use translation as a stand-in for a complex problem that, until recently, was difficult to automate—but can now be solved through the right combination of AI agents and prompts.

- Bridge fragmented systems: Demonstrate interaction between multiple platforms—automation tool, Notion database, and LinkedIn—reflecting typical IT landscape challenges.

- Deliver practical outcomes:

  - Stay current with rapidly evolving fields like AI (though this approach applies to other domains too).

  - Convert insights into actionable outputs, such as LinkedIn posts summarizing key updates or a continuously refreshed corporate KPI dashboard.

Why? The Learning Journey

If you’re only interested in the technical details, feel free to skip ahead to Part 2 and Part 3 of this series.

However, this section covers the systems, components, and setup required to get n8n running effectively.

For everyone else: the reason it’s called a Learning Journey is simple—the journey itself is the destination. My goal was to explore how modern AI advancements could be put to real-world use in a corporate environment.

After extensive reading, I revisited an old adage:

- You remember 10% of what you read, 50% of what you do, and 90% of what you teach.

Recently, I came across a great visualization on LinkedIn illustrating the process of building AI-driven apps (focused on AI agents), which resonated with this principle. I’ll share it here because it captures the essence perfectly.

This approach doesn’t perfectly align with my use case—some points simply don’t apply (for example, “Start a platform or community” isn’t relevant here). And while I usually lean toward Python, this particular project pushed me to adapt and work with JavaScript instead.

But here’s the essence: to truly learn, I wanted to get hands-on with a real project. Hands-on with data. Hands-on with (low) code. Because it’s in the engine room that you understand how the engine actually works.

After fumbling my way to success with the described use case, I realized it would be valuable to summarize the entire learning experience. Not just to reinforce the extra 40% of knowledge that comes from teaching what you’ve learned—but also to share it with others, because practical n8n tutorials that go beyond the basics are hard to find on Medium. That’s my motivation for writing this piece.

One interesting side note: as fascinated as I am by AI, I believe it’s still worth the effort to sit down and synthesize your own thoughts. So everything you’ll read here is 100% AI-free—purely my own reflections, not generated by an LLM.

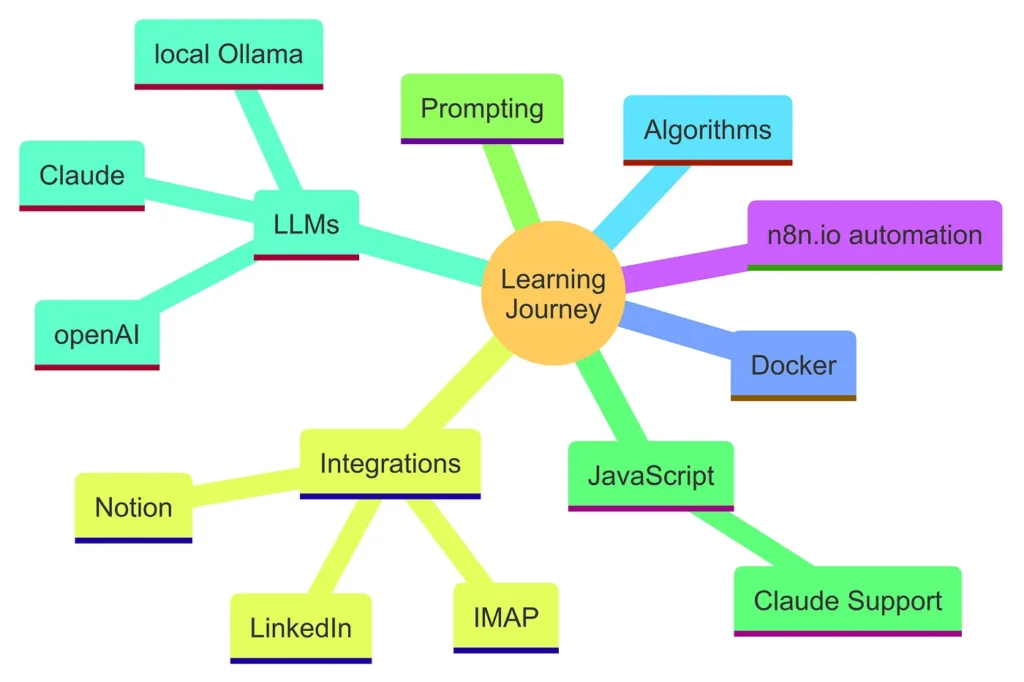

Long story short: the graphic below summarizes all the key topics I explored during this journey. These areas made sure the time invested in this project was absolutely worth it.

What I Learned: A Quick Overview

I’ll dive deeper into each branch of the mind map in later parts of this series. For now, here’s a quick overview of the key areas I explored during my learning journey:

n8n.io Automation

Getting hands-on with n8n, the core automation tool used in this project. Understanding how its workflows, nodes, and integrations work was essential for building a scalable solution.

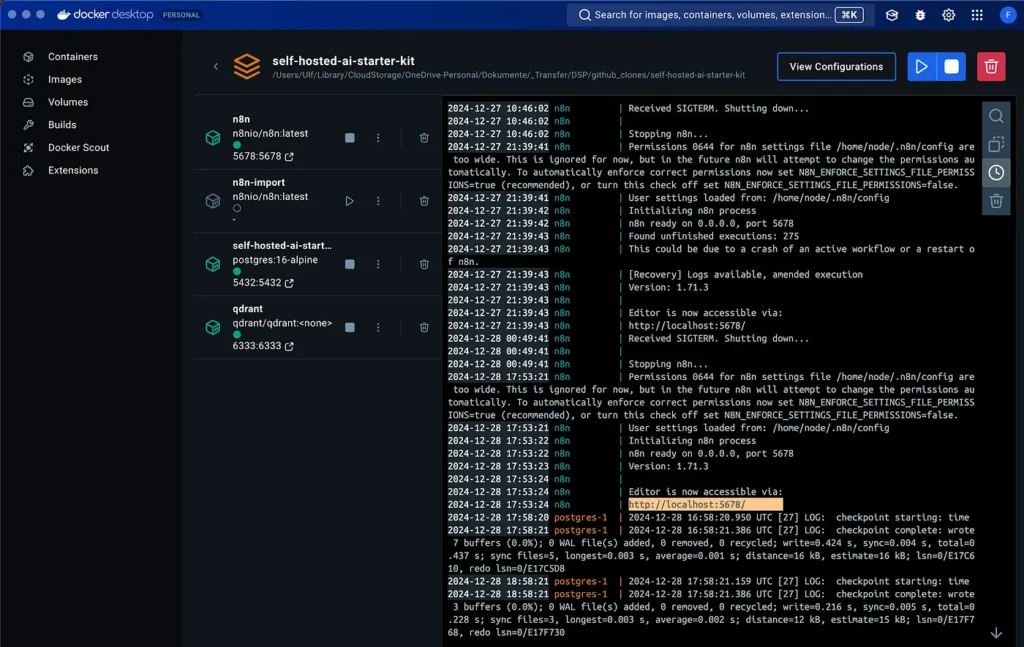

Docker

The environment powering n8n’s AI Starter Kit runs on Docker—and learning this tool turned out to be a valuable investment.

Why? Because if you’re serious about getting your hands dirty with data and low-code, Docker is now a must-have skill.

Before this project, I thought Docker was something only for hardcore techies managing complex cloud deployments. What I discovered:

- It has become a de facto standard for many modern services.

- It offers a user-friendly desktop version, making it accessible even for non-specialists.

- Many innovative frameworks now ship as Dockerized solutions, making it well worth learning.

JavaScript

Coming from Python, this was new territory. Python integration with n8n is still in beta, so I switched to JavaScript for this project.

The good news? You don’t need to be an expert coder anymore to get things done, especially with the help of Claude, which offers solid coding support even on its free tier.

With a bit of transfer learning from Python and the right prompts, I managed all the required code adjustments successfully.

Integrations

A key objective of this project was to simulate a fragmented corporate IT landscape by connecting multiple platforms.

The workflows had to integrate:

- Notion (as a database)

- LinkedIn (for publishing)

- IMAP mail server (for retrieving newsletters)

n8n made this possible, giving me practical experience in orchestrating systems that usually operate in silos.

LLMs

Some n8n automation features rely on AI Nodes, which in turn connect to LLMs.

This project gave me hands-on experience with different LLMs via API and helped me evaluate their performance in real automation scenarios.

Prompt Engineering

Several workflow steps relied on AI nodes or Agent nodes, making prompt design critical. Prompting has evolved into an art form—and this project gave me a chance to refine that skill.

Workflow Logic & Algorithms

I started with standard workflows as a base, but quickly learned that real-world needs require custom adjustments.

For example, there’s a big difference between:

- A live workflow that continuously processes updates

- A batch mode workflow that runs on-demand

These distinctions required significant tweaks beyond “plain vanilla” templates.

Why This Tool Stack?

Tool Choice: n8n.io

n8n strikes a unique balance between:

- Process automation (think Zapier, Make.com)

- AI agent frameworks (like LangGraph, CrewAI, Flowise, AutoGen)

I was looking for a solution that combined automation power with AI flexibility—and n8n fit that sweet spot.

Why n8n stood out:

- Offers a free AI Starter Kit, packaged in Docker

- Free for basic use, affordable at scale

- Allows you to integrate AI agents into automation for advanced flexibility

Tool Choice: Docker

This wasn’t an intentional choice at first, but I’m glad I encountered it.

Docker has evolved from being “for techies” into an industry-standard tool with a great desktop experience for beginners.

Learning Docker opens doors because many modern solutions—including AI frameworks—are now shipped as Docker containers.

If You’re New to Docker

Think of Dockerization as the next level of creating environments when coding in Python. In Python, you might create a virtual environment with specific packages and dependencies. Docker does something similar—but on a much larger scale.

Here’s the key difference:

Docker doesn’t just create a package configuration; it creates an entire mini-computer system inside your system. That’s why it’s so powerful for cloud deployment:

- You can quickly deploy Docker containers on a running server.

- Scaling up or down becomes fast and efficient.

- Each container is self-contained, bringing everything it needs for the job.

- This self-containment makes it portable and reasonably well isolated: by default, a container only exposes the ports and folders you explicitly map.

Docker Basics in Simple Terms

- Docker Images: Think of these as class definitions in object-oriented programming. They serve as blueprints.

- Docker Containers: These are the instances of the class, meaning the actual running systems based on the image.

- YAML Files: These descriptive configuration files define how containers are built and how services work together.

- Multiple Services: Often, an application needs several services, each running in its own container (for example, a database for storing workflow data). These are defined and orchestrated through Docker Compose YAML files.

So, in short:

- Image = Blueprint

- Container = Running instance

This analogy might make a Docker expert cringe a bit, but for beginners, it’s a helpful way to understand the concept.
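To make the analogy concrete, here is a tiny JavaScript sketch. It models the idea only: the `Image` and `Container` classes and the name `n8n-example` are made up for illustration and have nothing to do with Docker’s real API.

```javascript
// Illustrative analogy only: these classes model the concept, not Docker itself.
class Image {
  constructor(name, packages) {
    this.name = name;
    // Freeze the list so the blueprint cannot change once it is "built".
    this.packages = Object.freeze([...packages]);
  }
}

class Container {
  constructor(image) {
    this.image = image;   // every container points back to its blueprint
    this.running = false;
    this.files = [];      // state that lives only inside this instance
  }
  start() {
    this.running = true;
    return this;
  }
  write(file) {
    this.files.push(file);
    return this;
  }
}

// One blueprint, two independent running instances.
const n8nImage = new Image("n8n-example", ["node", "n8n"]);
const a = new Container(n8nImage).start().write("workflow.json");
const b = new Container(n8nImage).start();

console.log(a.image === b.image);             // true: same blueprint
console.log(a.files.length, b.files.length);  // 1 0: separate state
```

Both containers share the same blueprint, but a file written in one never shows up in the other. That is the part of the analogy worth remembering.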

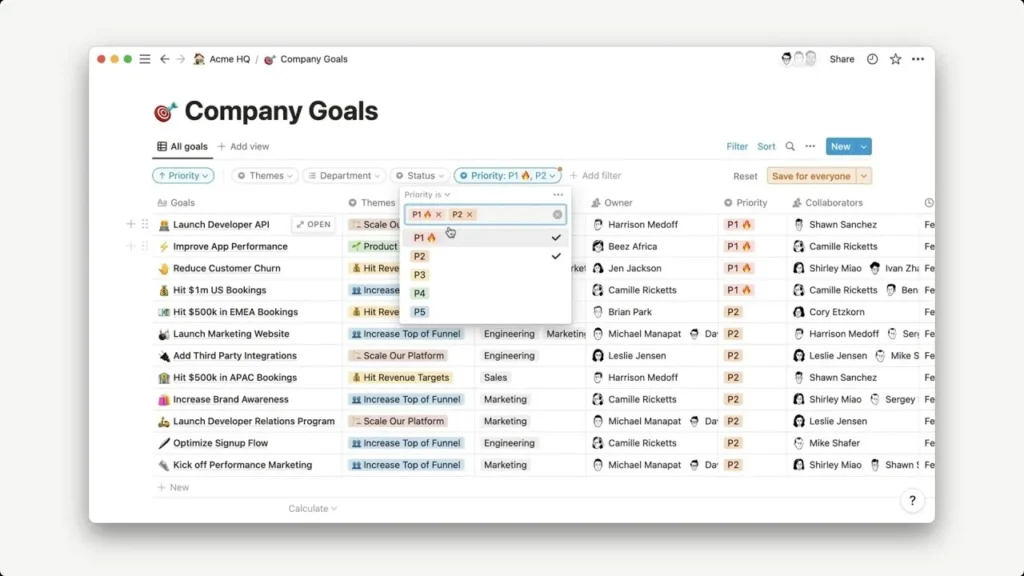

Tool Choice: Notion

I needed a way to store retrieved and summarized newsletter data in a database that I could also access outside the n8n workflow. n8n offers many connectors and database options, but here’s the catch:

- Free local databases are often ephemeral (data disappears when the container is removed, unless you configure a persistent volume).

- Online databases usually require API keys, paid plans, and often lack a user-friendly interface.

That’s why Notion was the natural choice for me. I’ve been using it for years, and it’s now a major player in the productivity tool space. Its database functionality is simple yet effective, and combined with n8n’s built-in Notion integration, it provided:

- Ease of setup

- Permanent storage

- An intuitive interface for viewing and managing data outside the automation workflow

Tool Choice: LinkedIn

I’m certainly not the first person to share newsletter summaries on LinkedIn. The difference here is the full automation of the process. Instead of manually copying newsletter content into ChatGPT, Claude, or another LLM to generate summaries, and then manually creating LinkedIn posts, I wanted an end-to-end automated workflow.

Beyond automation, there were additional motivations:

1. Adding Translation for Accessibility

Most AI-related content is published in English. This creates an information bubble—while those of us reading this likely believe AI will transform the way we work and live, many people worldwide are still unaware of its impact.

Language is a major barrier. Non-English speakers often struggle to keep up with developments in AI. By translating summaries into German, I aimed to make important insights more accessible and useful to a broader audience.

2. Proving Multi-System Integration

Connecting to LinkedIn wasn’t just about posting—it was a Proof of Concept (PoC) for linking multiple cloud-based IT services through a single automation tool. The same principle applies to the Notion integration in this workflow, showing how to bridge a fragmented IT landscape with n8n.

3. Moving From “Talking AI” to “Doing AI”

LinkedIn is full of jokes about the ratio of people talking about AI versus those building with AI. This project was my way of contributing to the latter group.

Why This Use Case Works

Automating AI-driven content to write about AI might sound self-referential—but adding translation makes the output genuinely useful. It helps democratize access to cutting-edge information.

More importantly, this approach is domain-agnostic. The same workflow could be applied to:

- Any industry where regular newsletters provide value.

- Any use case where aggregated updates can be turned into posts, dashboards, or reports.

Not working with newsletters? Swap the IMAP email retrieval with RSS feeds or a web scraping component—and you’re good to go.
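Swapping the source stays simple if every source is normalized into the same shape before summarization. The sketch below is one possible way to do that, not the workflow I actually built. All field names (`from`, `date`, `text`, `feedTitle`, `pubDate`, `content`) are assumptions; adapt them to whatever your mail or feed node emits.

```javascript
// Hypothetical field names throughout: check the real output of your nodes.

// Normalize an email message into a source-independent record.
function fromEmail(msg) {
  return {
    source: "email",
    from: msg.from,
    date: new Date(msg.date).toISOString(),
    text: msg.text,
  };
}

// Normalize an RSS item into the same record shape.
function fromRssItem(item) {
  return {
    source: "rss",
    from: item.feedTitle,
    date: new Date(item.pubDate).toISOString(),
    text: item.content,
  };
}
```

Because both functions return the same keys, everything downstream (summarizing, storing, posting) does not need to know where an item came from.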

From Theory to Practice: The Technical Setup

This article won’t go into extreme detail about the setup, but I’ll share key resources and a high-level overview of what’s needed.

Prerequisites

To replicate this project, you need:

- Docker installed and running

- n8n AI Starter Kit configured as a Docker container

- A mail inbox (IMAP-enabled) subscribed to relevant newsletters

- A Notion database prepared for storing summaries

- LinkedIn API access for automation

Docker on Mac

Install Docker Desktop for Mac (or Windows) using this guide:

Getting Started with Docker Desktop

n8n AI Starter Kit

All required files are available on n8n’s GitHub repository:

Self-Hosted AI Starter Kit on GitHub

How to host on Docker:

n8n Docker Installation Guide

Highly recommended tutorial video:

YouTube: Step-by-Step Setup

Mac users:

If you plan to run Ollama locally, note these considerations:

- Running Ollama inside the container is possible but not recommended—it prevents GPU acceleration on Apple Silicon.

- The better option: run Ollama locally on your Mac and connect it from n8n using http://host.docker.internal:11434/ instead of http://localhost:11434/
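The reason for the different address: inside a container, `localhost` points at the container itself, while `host.docker.internal` resolves to your Mac when you use Docker Desktop. Here is a small sketch of that choice, plus an optional connectivity check. The function names are my own, and the check assumes Node 18+ (for the built-in `fetch`) and uses Ollama’s `/api/tags` endpoint, which lists locally installed models.

```javascript
// Pick the Ollama base URL depending on where n8n itself is running.
function ollamaBaseUrl(n8nRunsInDocker) {
  return n8nRunsInDocker
    ? "http://host.docker.internal:11434" // n8n in a container, Ollama on the host
    : "http://localhost:11434";           // both running directly on the host
}

// Optional check: ask Ollama which models are installed.
// Needs a running Ollama instance, so it is defined here but not called.
async function listOllamaModels(baseUrl) {
  const res = await fetch(`${baseUrl}/api/tags`);
  if (!res.ok) {
    throw new Error(`Ollama responded with HTTP ${res.status}`);
  }
  const body = await res.json();
  return body.models.map((m) => m.name);
}
```

If `listOllamaModels(ollamaBaseUrl(true))` fails from inside the container but works from your terminal with the localhost address, the base URL in the n8n credentials is the first thing to check.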

Understanding YAML & .env

The docker-compose.yml defines:

- Which Docker images to include

- How they’re configured

The .env file stores environment variables. You’ll rarely need major changes here if you use the provided configuration.

Updating the Starter Kit

Updating requires recreating the container after pulling the latest image:

Update Instructions

The easiest method:

Using Docker Compose

Email Inbox for Newsletters

IMAP is the simplest option. I first tried Gmail, but Google’s complex authorization process made it impractical. An IMAP account is much easier.

Notion and LinkedIn Integration

Follow n8n’s official guides:

Once connected, n8n offers “Test” buttons to verify credentials, making setup straightforward.

Prepare your Notion database with:

- FROM field (email sender)

- DATE field (newsletter date)

- GLANCE field (short summary for quick viewing)
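To show how data could be shaped for these three fields, here is a minimal sketch in the style of an n8n Code node. n8n passes data between nodes as an array of items shaped like `{ json: {...} }`. The input field names (`from`, `date`, `summary`) and the 200-character limit for GLANCE are assumptions for illustration, not values taken from my workflow.

```javascript
// Inside an n8n Code node you would typically start from `$input.all()`.
// Here a plain array stands in, so the sketch runs anywhere.
function toNotionRows(items, glanceLimit = 200) {
  return items.map(({ json }) => ({
    json: {
      FROM: json.from,
      // Keep only the calendar date (YYYY-MM-DD, in UTC).
      DATE: new Date(json.date).toISOString().slice(0, 10),
      // Shorten long summaries so GLANCE stays quick to scan.
      GLANCE:
        json.summary.length > glanceLimit
          ? json.summary.slice(0, glanceLimit - 1).trimEnd() + "…"
          : json.summary,
    },
  }));
}

// Example input in n8n's item shape (hypothetical field names).
const exampleItems = [
  {
    json: {
      from: "news@example.com",
      date: "2025-01-15T08:00:00Z",
      summary: "Three new open-weight models were released this week.",
    },
  },
];

console.log(toNotionRows(exampleItems)[0].json.DATE); // 2025-01-15
```

The output keys match the Notion column names one to one, which keeps the field mapping in the Notion node trivial.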

Why Two Workflows?

n8n workflows run continuously when deployed online, triggered by nodes like IMAP (for new emails).

- Workflow 1: Fetch emails → Summarize → Store in Notion

- Workflow 2: Pull summaries from Notion → Compile LinkedIn post → Publish

Since I ran this locally in batch mode, I triggered nodes manually, which required adding filter nodes to avoid duplicates. In a live setup, both workflows would run independently with different intervals (e.g., every hour vs. once per day).
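The duplicate check can be expressed in a few lines of JavaScript. This is a simplified sketch of the idea, not a copy of the filter nodes in my workflows, and it assumes that the combination of FROM and DATE identifies a newsletter uniquely.

```javascript
// Build a stable key for a newsletter; FROM and DATE mirror the Notion fields.
function rowKey(row) {
  return `${row.FROM.trim().toLowerCase()}|${row.DATE}`;
}

// Keep only incoming rows that are not stored yet
// and that do not repeat within the same batch.
function filterNewRows(incoming, alreadyStored) {
  const seen = new Set(alreadyStored.map(rowKey));
  return incoming.filter((row) => {
    const key = rowKey(row);
    if (seen.has(key)) return false;
    seen.add(key);
    return true;
  });
}
```

In batch mode, `alreadyStored` would come from a Notion query at the start of the run. In a live setup the trigger only fires for new emails, so this step matters much less.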
